FlexHDR: Modelling Alignment and Exposure Uncertainties for Flexible HDR Imaging

IEEE Transactions on Image Processing, 2022

Sibi Catley-Chandar, Thomas Tanay, Lucas Vandroux, Aleš Leonardis, Gregory Slabaugh, Eduardo Pérez-Pellitero

Huawei Noah’s Ark Lab

Queen Mary, University of London

Abstract

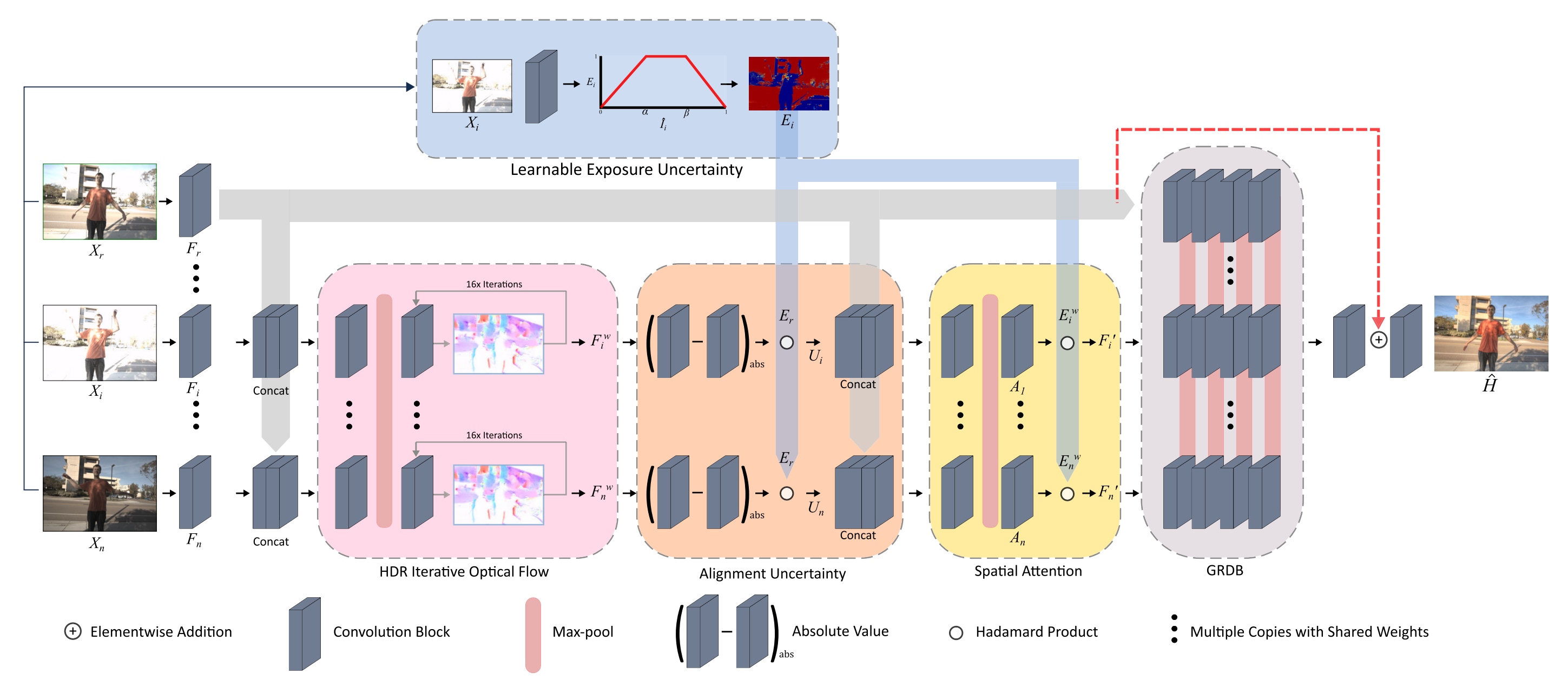

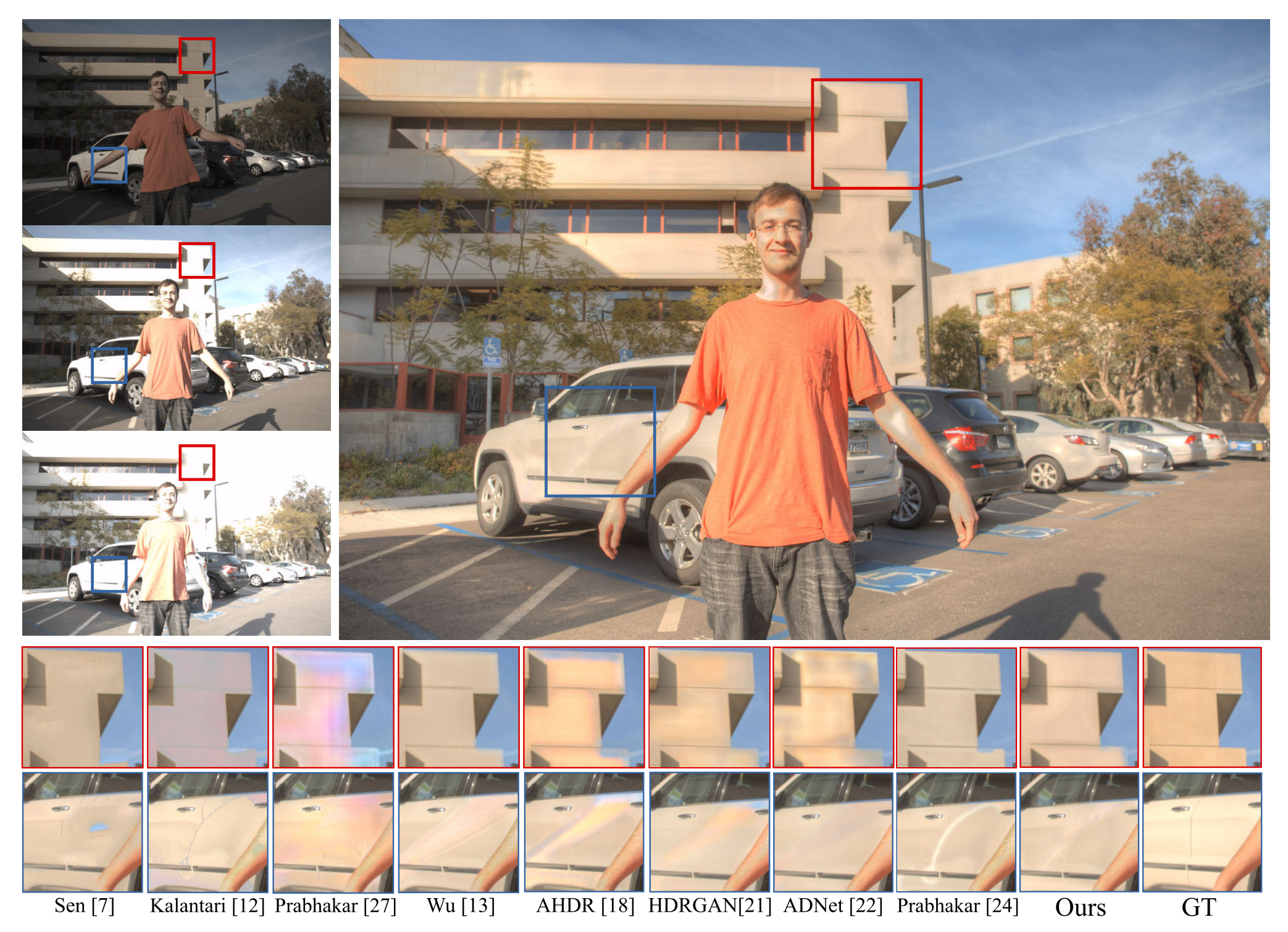

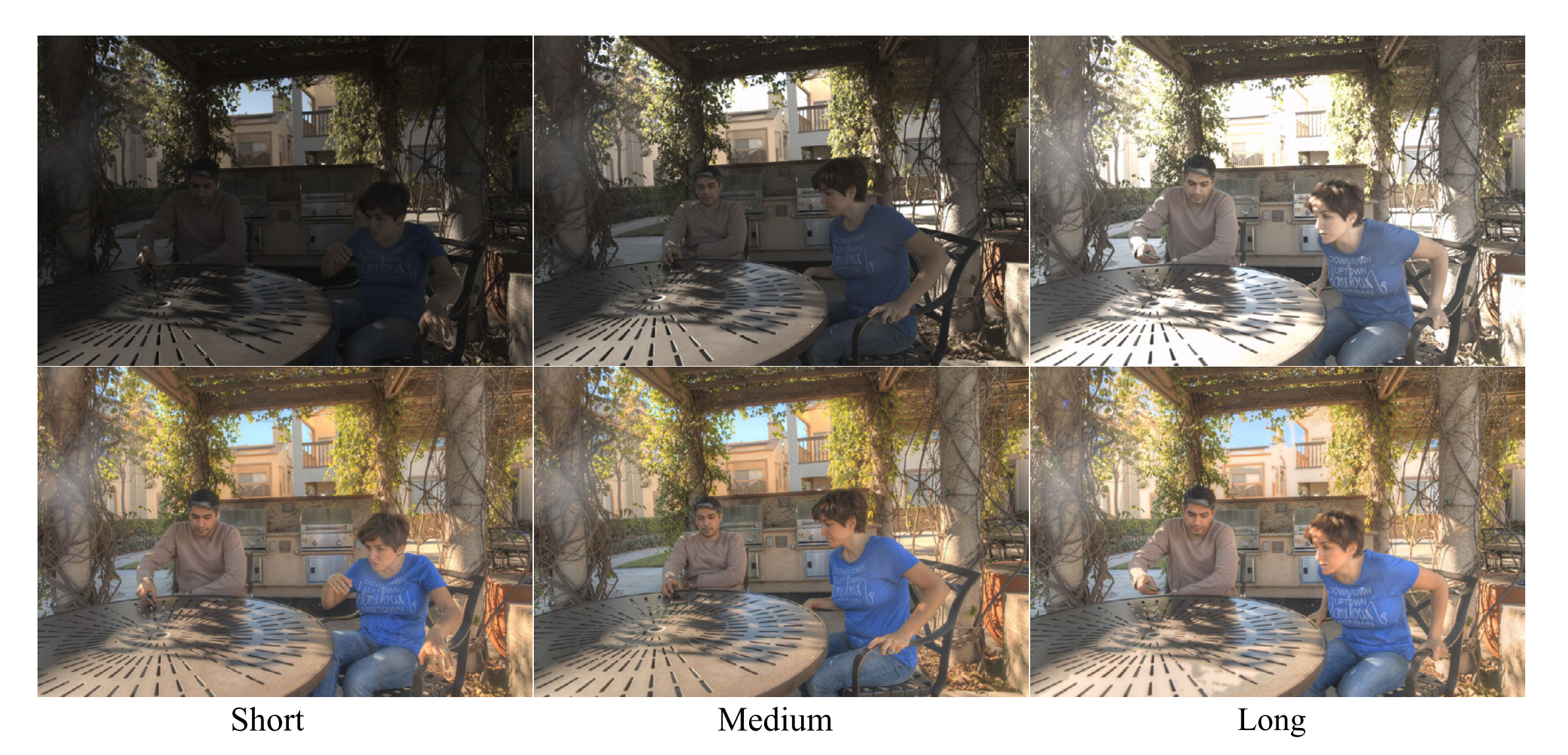

High dynamic range (HDR) imaging is of fundamental importance in modern digital photography pipelines and is used to produce a high-quality photograph with well-exposed regions despite varying illumination across the image. This is typically achieved by merging multiple low dynamic range (LDR) images taken at different exposures. However, over-exposed regions and misalignment errors due to poorly compensated motion result in artefacts such as ghosting. In this paper, we present a new HDR imaging technique that specifically models alignment and exposure uncertainties to produce high-quality HDR results. We introduce a strategy that learns to jointly align the frames and assess alignment and exposure reliability using an HDR-aware, uncertainty-driven attention map, which robustly merges the frames into a single high-quality HDR image. Further, we introduce a progressive, multi-stage image fusion approach that can flexibly merge any number of LDR images in a permutation-invariant manner. Experimental results show that our method produces better-quality HDR images, with up to 1.1 dB PSNR improvement over the state of the art, and subjective improvements in terms of better detail, colours, and fewer artefacts.
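To make the idea of merging differently exposed LDR frames concrete, the following is a minimal NumPy sketch of a classical per-pixel weighted merge. It is not the FlexHDR network: the hand-crafted `hat_weight` function is only an assumed stand-in for the learned, uncertainty-driven attention map, the frames are assumed to be linear, already aligned, and scaled to [0, 1], and all function names and the synthetic scene are hypothetical. Because the merge is a weighted mean over frames, it accepts any number of inputs and does not depend on their order, which is the simplest form of the permutation invariance mentioned above.

```python
import numpy as np

def hat_weight(ldr, eps=1e-3):
    """Hand-crafted confidence (assumption, not the learned attention map):
    low near 0 and 1 (under/over-exposed pixels), high at mid-tones."""
    return 1.0 - np.abs(2.0 * ldr - 1.0) + eps

def merge_hdr(ldrs, exposure_times):
    """Merge aligned, linear LDR frames (values in [0, 1]) into a radiance map.

    Each frame is divided by its exposure time to reach a common radiance
    scale, then the frames are combined with a per-pixel weighted mean.
    """
    ldrs = np.stack(ldrs).astype(np.float64)  # shape (N, H, W)
    times = np.asarray(exposure_times, dtype=np.float64).reshape(-1, 1, 1)
    weights = hat_weight(ldrs)
    radiance = ldrs / times
    return (weights * radiance).sum(axis=0) / weights.sum(axis=0)

# Synthetic example: one static scene captured at three exposure times.
rng = np.random.default_rng(0)
scene = rng.uniform(0.05, 4.0, size=(4, 4))   # hypothetical true radiance
times = [0.125, 0.5, 2.0]
frames = [np.clip(scene * t, 0.0, 1.0) for t in times]  # clipping = over-exposure

hdr = merge_hdr(frames, times)
hdr_reversed = merge_hdr(frames[::-1], times[::-1])  # same frames, other order
```

In this sketch, clipped (over-exposed) pixels receive almost no weight, so the merged value is dominated by the frames in which the pixel is well exposed. A learned approach such as the one described in the abstract replaces the fixed weighting with predicted attention and also has to handle motion between frames, which this example ignores.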

Overview

Results

BibTeX Citation

@article{catleychandar2022,
  author={Catley-Chandar, Sibi and Tanay, Thomas and Vandroux, Lucas and Leonardis, Ales and Slabaugh, Gregory and P\'erez-Pellitero, Eduardo},
  journal={IEEE Transactions on Image Processing},
  title={Flex{HDR}: Modeling Alignment and Exposure Uncertainties for Flexible {HDR} Imaging},
  year={2022},
  volume={31},
}